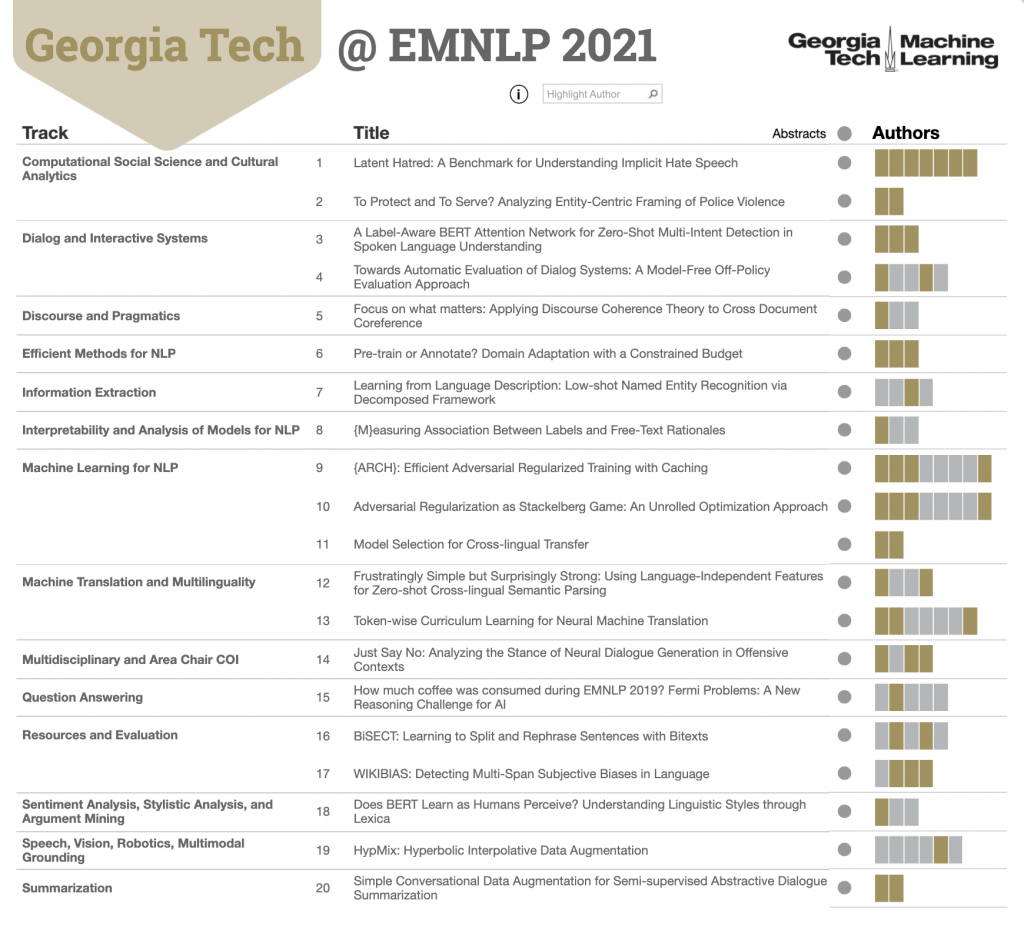

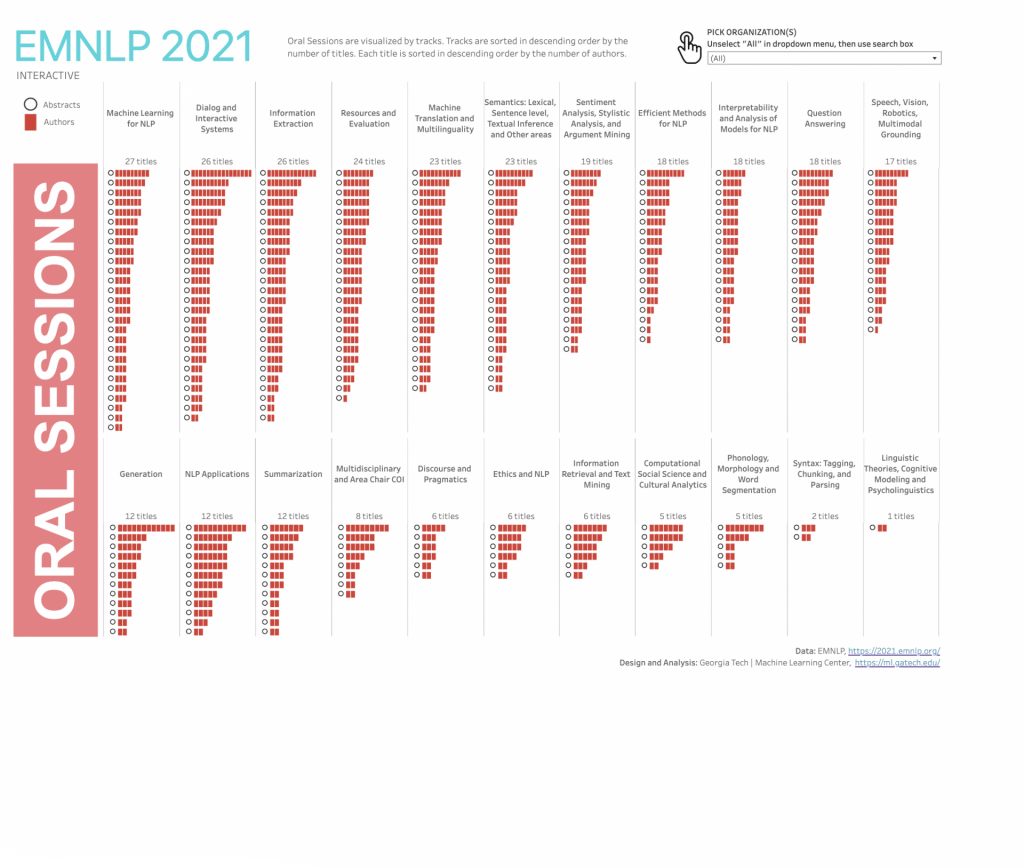

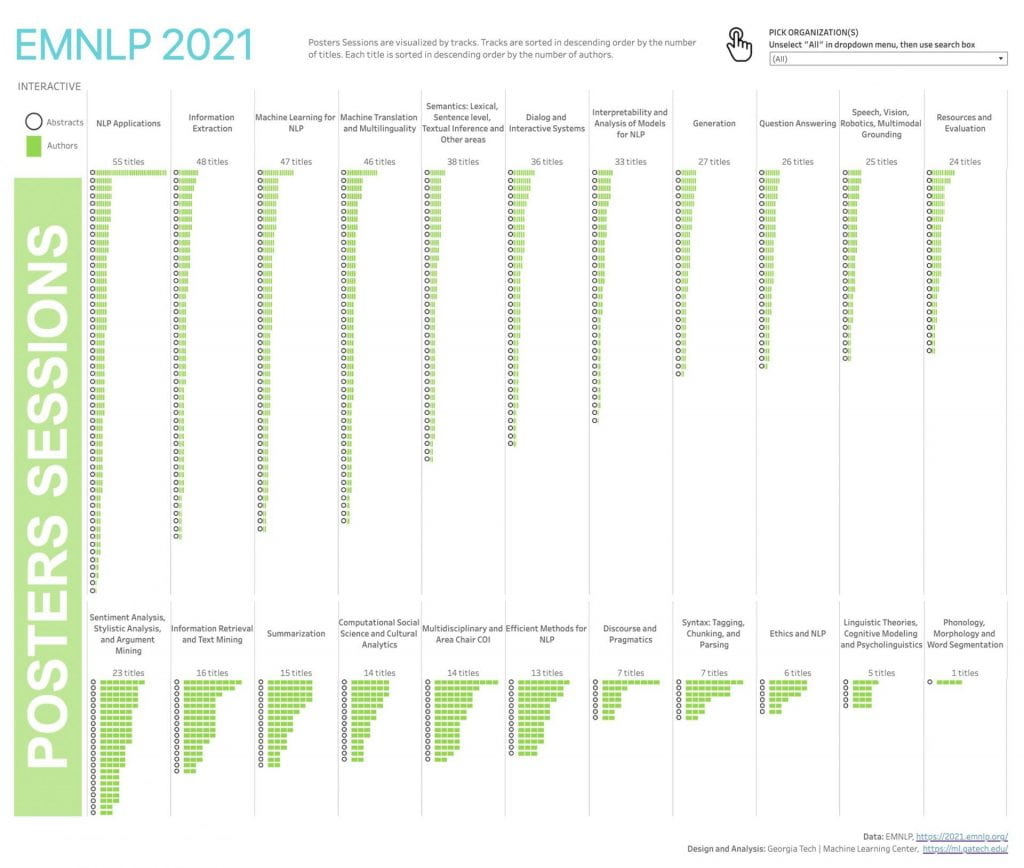

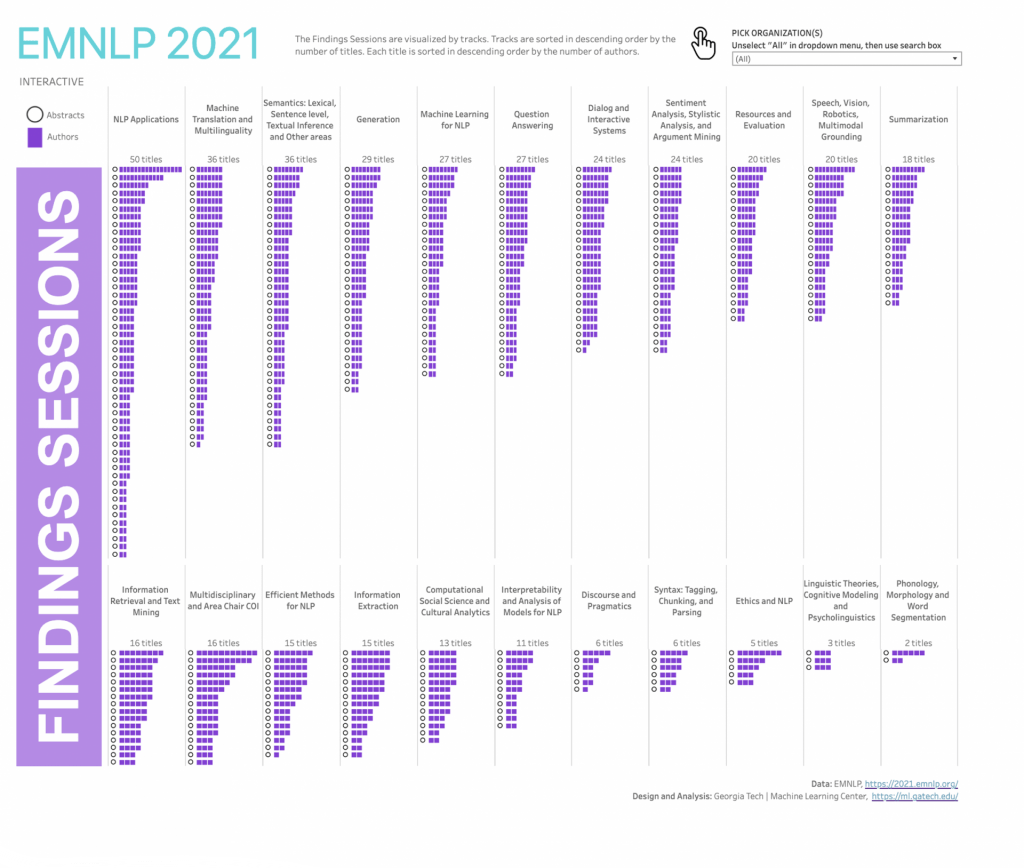

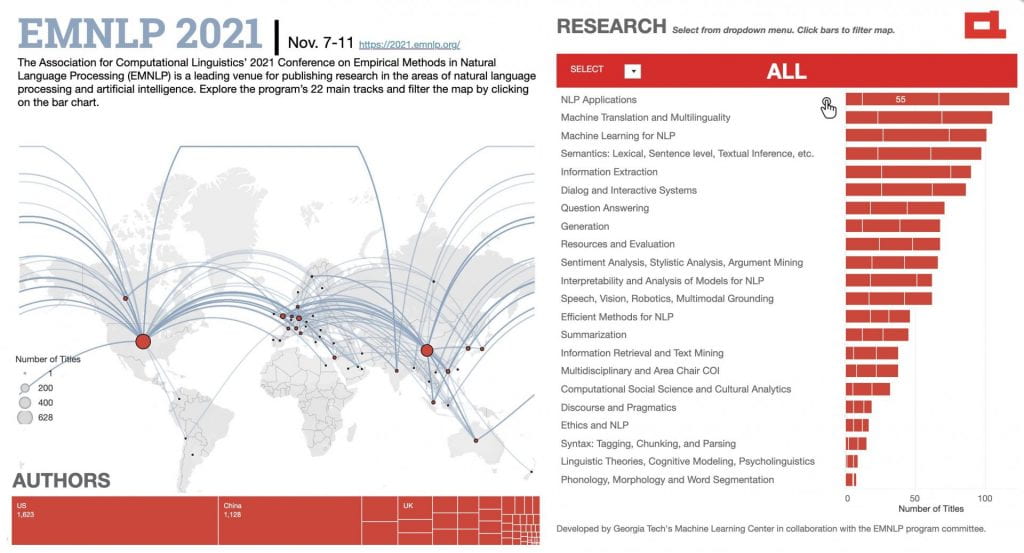

Georgia Tech research papers include natural language processing work from across the EMNLP program. Explore all the people and individual papers by clicking the data graphic. Georgia Tech’s 28 authors in the program contributed to 18 long papers and two short papers.

Georgia Tech at EMNLP 2021

Welcome to Georgia Tech’s virtual experience for Empirical Methods in Natural Language Processing 2021 (EMNLP). Look around, explore, and discover insights into the institute’s research contributions at the conference, taking place Nov. 7-11. EMNLP is a leading venue for the areas of natural language processing and artificial intelligence. It provides researchers the opportunity to present and discuss their work on the latest contributions to the field. Our coverage includes trends from EMNLP’s entire papers program.

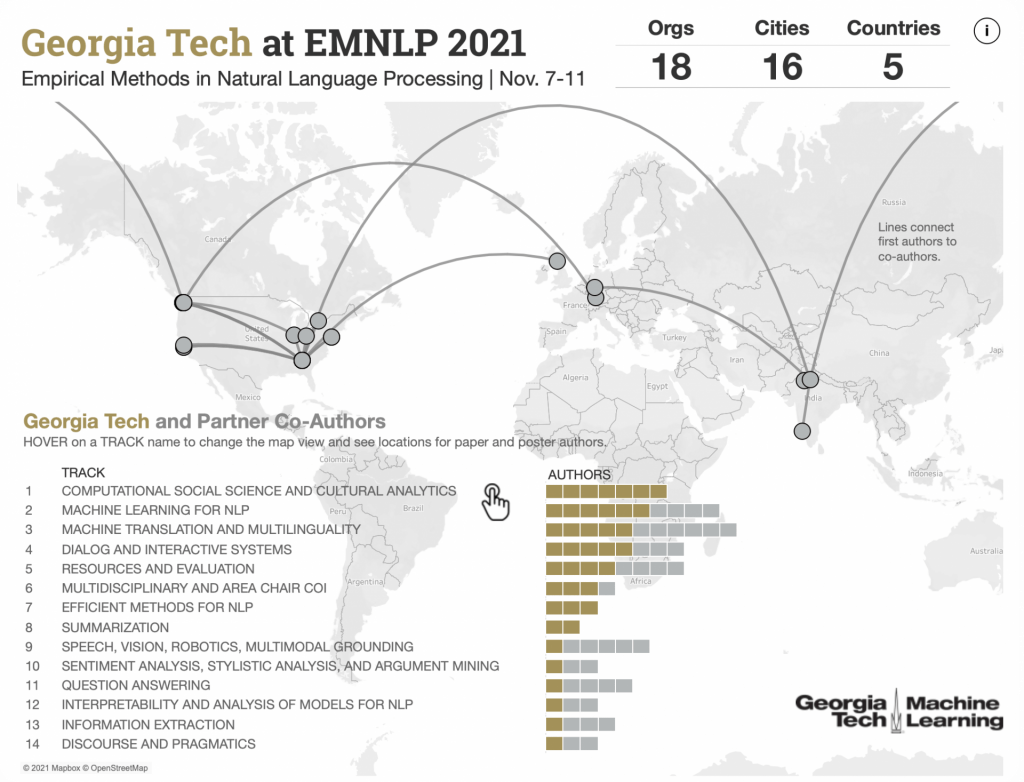

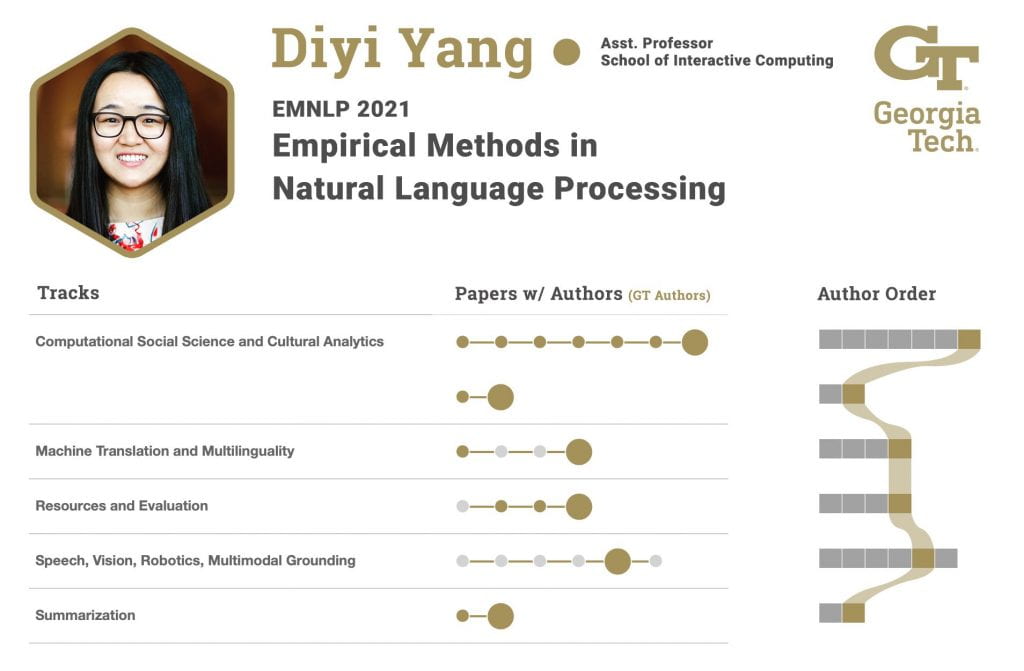

Georgia Tech authors have work represented in 14 of EMNLP’s 22 main research tracks. The map shows all authors from Georgia Tech and their external partners by track. The institute has seven authors in Computational Social Science and Cultural Analytics, where GT is strongest. Georgia Tech’s presence here includes the most authors from any organization, and all seven authors have oral papers, the highest tier of research in the program. Explore the map.

FACULTY FOCUS | Q&A with Experts at EMNLP

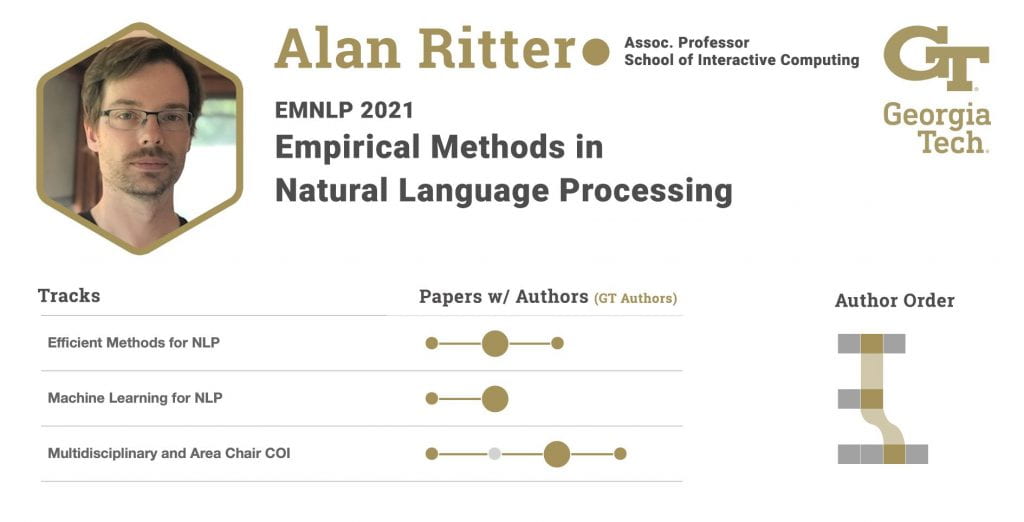

Alan Ritter, Associate Professor

School of Interactive Computing

If you had to pick, which of your research results accepted at EMNLP would you want to highlight?

I am excited about my Ph.D. student Yang Chen’s paper “Model Selection for Cross-Lingual Transfer”. In this work, Yang addressed an important problem in cross-lingual transfer learning, where the goal is to solve a natural language understanding task in a specific language, for example German, but the model only has access to supervised training data in English. Recently, pre-trained transformer models, such as multilingual BERT or XLM-RoBERTa, have demonstrated surprisingly good performance, yet performance on the target language varies widely between fine-tuning runs. It is hard to know which model will work best because, in the zero-shot setting, you don’t have access to any labeled data in the target language. Our solution is a learned ranker that predicts which multilingual model will perform best on a given target language. We found this works better than selecting models with English development data, which is the standard method currently used in the literature.

What recent advancements or new challenges have you seen in the research areas that you are involved in?

One challenge is that pre-trained models, such as BERT and GPT, are becoming more and more costly to train. In our paper “Pre-train or Annotate? Domain Adaptation with a Constrained Budget”, one of my Ph.D. students, Fan Bai, together with Professor Wei Xu and me, investigated the cost of renting GPUs from a service such as Amazon EC2 or Google Cloud, and whether that money would be better spent on hiring annotators to simply label more supervised training data. We found that for small budgets, it is actually better to invest all your funding in data annotation; however, as the available budget increases, a combination of annotation and pre-training is the most economical strategy. This is a surprising result, because the conventional wisdom in the field has been that annotating data is expensive, so methods that can make use of unlabeled data, through pre-training or other methods, are more cost-effective.

If you were to focus on just one social application of your EMNLP work, what might it be?

In our paper “Just Say No: Analyzing the Stance of Neural Dialogue Generation in Offensive Contexts”, written by my Ph.D. student Ashutosh Baheti together with Maarten Sap, Mark Riedl, and me, we showed that neural chatbots, such as DialoGPT or GPT-3, are two times more likely to agree with toxic comments made by users on Reddit. It appears these models have learned to imitate an echo-chamber effect present in the data they are trained on: users are less likely to reply to an offensive comment online unless they agree with the author. There was a well-written article covering this paper on The Next Web.

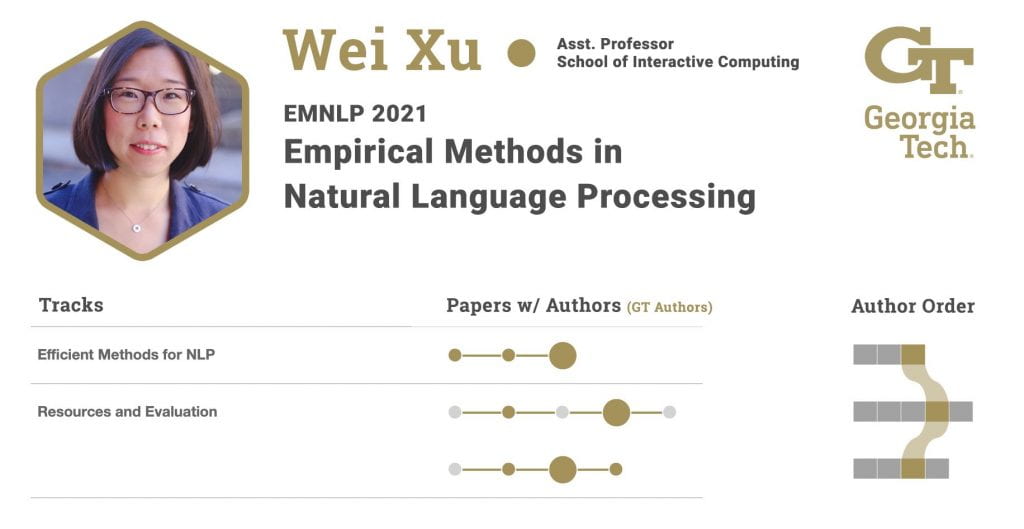

Wei Xu, Assistant Professor

School of Interactive Computing

If you had to pick, which of your research results accepted at EMNLP would you want to highlight?

Three papers happen to be on three different topics I am very excited about, in collaboration with different researchers. Some point to a new direction; others tackle a long-standing research problem:

- Natural language generation — “BiSECT: Learning to Split and Rephrase Sentences with Bitexts”

- Economics of pre-trained language models — “Pre-train or Annotate? Domain Adaptation with a Constrained Budget”

- Stylistics to reduce biases in language — “WIKIBIAS: Detecting Multi-Span Subjective Biases in Language”

What recent advancements or new challenges have you seen in the research areas that you are involved in?

It has been very exciting that large pre-trained language models, such as T5, have significantly improved natural language generation; but at the same time, the generated outputs are still far from good enough (e.g., they contain hallucinations, and the models struggle to generate or recognize paraphrases) to be useful for real-world applications. My research group aims to develop a complete solution with new machine learning models, datasets, and evaluation metrics.

If you were to focus on just one social application of your EMNLP work, what might it be?

Our work in the paper “BiSECT: Learning to Split and Rephrase Sentences with Bitexts” simplifies long, complex sentences into shorter ones to help people read. This particular NLP research topic is called “Text Simplification”, and it has direct applications to accessibility and education. We are interested in improving textual accessibility for all people. It can help school children read news articles and STEM material, help the general public read medical documents and government policies, help non-native speakers read in their second language, and more.

Diyi Yang, Assistant Professor

School of Interactive Computing

If you had to pick, which of your research results accepted at EMNLP would you want to highlight?

I would pick “Latent Hatred”. Hate speech is pervasive in social media, causing serious consequences for victims of all demographics. Mai and Caleb’s work significantly advances our understanding of how implicit hate speech works today. Their work introduces a theoretically justified taxonomy of implicit hate speech and a benchmark corpus with fine-grained labels to support research on detecting and explaining implicit hate speech. This interdisciplinary work is a collaboration between the School of Interactive Computing and the Sam Nunn School of International Affairs within Georgia Tech.

What recent advancements or new challenges have you seen in the research areas that you are involved in?

Despite the increasing success of NLP, current language technologies often fail in low-resource data, language, and dialect settings, and still suffer from bias and fairness issues. My lab has been addressing these issues and we will continue working on this emerging direction of socially aware language technologies.

If you were to focus on just one social application of your EMNLP work, what might it be?

An automatic meeting note-taker! For a while, we have been working on automatic conversation summarization by combining the rich linguistic structures in conversations and meetings with large NLP models. CODA, the work that we are going to present at EMNLP, will enable us to do such conversation summarization with limited supervision. With the rise of remote and video meetings since COVID-19, our automatic meeting note-taker would benefit a lot of people.

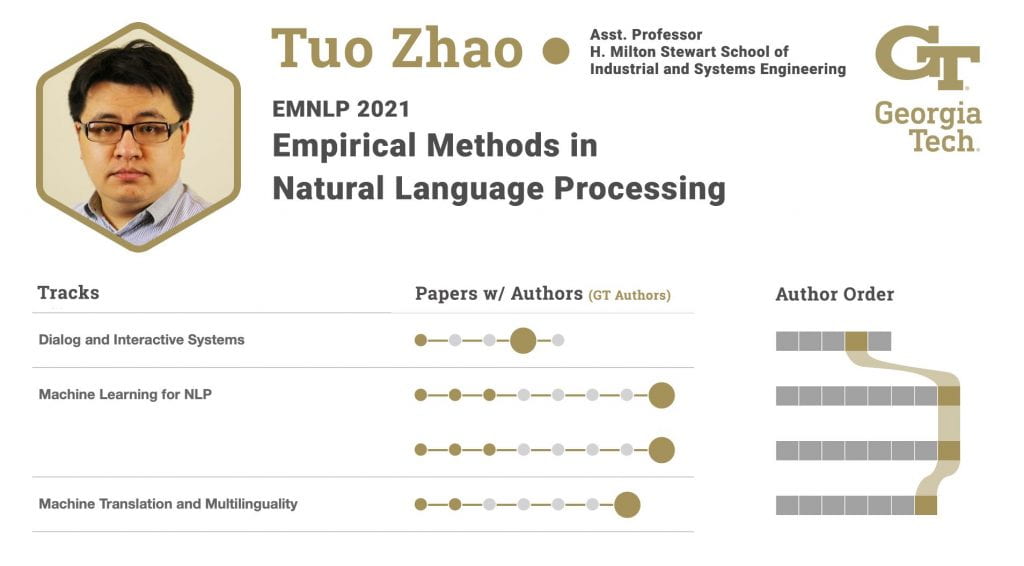

Tuo Zhao, Assistant Professor

H. Milton Stewart School of Industrial and Systems Engineering

If you had to pick, which of your research results accepted at EMNLP would you want to highlight?

I would highlight our result on automated evaluation of dialogue systems. Dialogue systems are one of the most fundamental and important problems in Natural Language Processing. However, an ideal environment for evaluating dialogue agents, i.e., the Turing test, needs to involve human interaction, which is not affordable for large-scale experiments. Our EMNLP 2021 paper (arxiv.org/abs/2102.10242) proposes a new reinforcement learning framework – ENIGMA – for automating the Turing test. ENIGMA adopts an off-policy approach and only requires a handful of pre-collected experience data. Therefore, the evaluation does not involve human interaction with the target agent, making automated evaluations feasible. We believe that our proposed framework can serve as a fundamental building block for the evaluation of dialogue systems and will motivate more sophisticated and stronger follow-up work on the automated Turing test.

What recent advancements or new challenges have you seen in the research areas that you are involved in?

Ultra-large neural language models (NLMs) are undoubtedly one of the most important breakthroughs in natural language processing in the past few years. They have significantly advanced research on natural language understanding, generation, etc. Despite this huge success, most of the effort has been devoted to developing ultra-large models, and the importance of the training algorithms has been less recognized. As a result, these large models are becoming more and more parameter-inefficient. Making a model 10 times larger only leads to a marginal improvement in prediction performance. Our EMNLP 2021 paper (arxiv.org/abs/2104.04886) proposes a new computational framework – SALT – for training large NLMs. SALT adopts a game-theoretic approach, which introduces a Stackelberg competition between an NLM and the adversarially perturbed training data. Such a competition encourages the NLM to make stable and robust predictions and significantly improves the generalization performance. Our experiments suggest that NLMs trained by SALT can outperform conventionally trained ones that are several times larger.

If you were to focus on just one social application of your EMNLP work, what might it be?

My research interests in NLP mainly focus on developing new methodologies for fundamental problems such as dialogue systems and neural language models. I am not very familiar with social applications. If I had to choose one, I guess I would be interested in chatbots for health screening, because they can greatly help evolve triage and screening processes in a scalable and non-contact manner. This is particularly useful for dealing with infectious diseases such as COVID-19.

Georgia Tech Authors

EMNLP ’21 | GLOBAL COMMUNITY

Special thanks to the EMNLP program committee for the collaboration

Machine Learning Center at Georgia Tech

The Machine Learning Center was founded in 2016 as an interdisciplinary research center (IRC) at the Georgia Institute of Technology. Since then, we have grown to include over 190 affiliated faculty members and 60 Ph.D. students, all publishing at world-renowned conferences. The center aims to research and develop innovative and sustainable technologies using machine learning and artificial intelligence (AI) that serve our community in socially and ethically responsible ways. Our mission is to establish a research community that leverages the Georgia Tech interdisciplinary context, trains the next generation of machine learning and AI pioneers, and is home to current leaders in machine learning and AI.